Stability AI, a prominent player in the generative AI space, has released Stable Audio 2.0, an update to its AI music generation tool. The new version adds features and improves the quality of the music the platform can create.

Improved Song Coherence and Structure

One of the notable enhancements in Stable Audio 2.0 is its ability to generate tracks that sound more coherent and song-like than those from the initial release. The update improves the melody and structure of the generated tracks, making them more appealing to the listener. As The Verge noted, the quality is not yet on par with human-composed music, but it is a clear step forward.

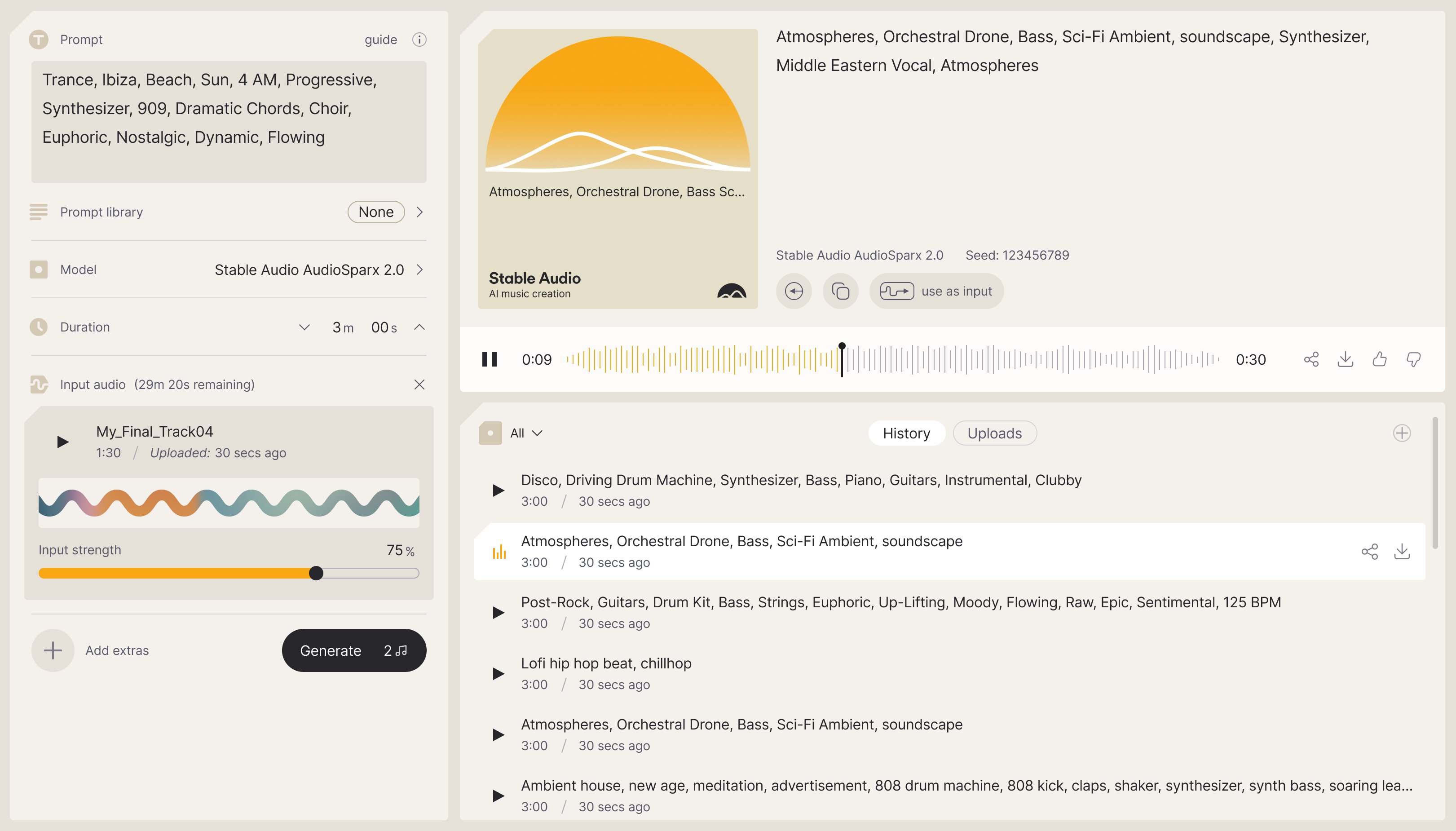

Audio-to-Audio Generation

Stable Audio 2.0 introduces a new audio-to-audio generation feature that allows users to input an existing audio clip and transform it based on a text prompt. This opens up exciting possibilities for music producers and artists to manipulate and remix existing sounds in creative ways. The "prompt strength" can be adjusted to control how closely the output follows the text prompt, giving users more control over the final result.

Limitations and Room for Improvement

Despite the advancements, Stable Audio 2.0 still has limitations. The model focuses primarily on instrumental music, and vocal synthesis is not yet supported. And although the instrumental tracks have improved, they still fall short of human-composed music in overall quality.

Concerns Over Training Data

As with the initial release, there are concerns about the training data used for Stable Audio 2.0. The model was trained on copyrighted music licensed from the AudioSparx library, which raises questions about whether the artists whose work was used are fairly compensated. However, artists on AudioSparx were given the option to opt out of having their music included in the training dataset.

Availability and Future Plans

Stable Audio 2.0 is currently available through a web interface, with both free and paid tiers. The free version is restricted to non-commercial use. Stability AI has expressed plans to release open-source models based on Stable Audio in the future, trained on different datasets. However, the current 2.0 version is not open-source.

Comparison to Other AI Music Tools

Compared with other prominent AI music generation models such as Google's MusicLM, Meta's MusicGen, and OpenAI's Jukebox, Stable Audio shows mixed results. In blind testing, MusicLM was judged to produce the most pleasant and coherent tracks, outperforming both Stable Audio and MusicGen. Stable Audio, however, was noted to excel at generating standalone instrument sounds and sound effects. Its weakness appears with complex prompts: as prompts become more detailed, Stable Audio tends to produce noisier, more cacophonous tracks than its competitors.

In conclusion, Stable Audio 2.0 represents a significant step forward in AI music generation from Stability AI. The improvements in song coherence, structure, and the addition of audio-to-audio generation capabilities make it an exciting tool for music creators. While there is still room for improvement to match human-level composition and address concerns over training data, the progress is promising. As the field of AI music generation continues to evolve, it will be interesting to see how Stable Audio and other tools push the boundaries of what is possible.

Learn more at stableaudio.com
